With this week’s claims that Google’s chatbot, LaMDA, has developed feelings of its own, Emily Watkins looks at where these feelings would actually come from, and whether sentient AI would really be that bad.

The computers are coming, but I suppose it was only a matter of time.

Since Icarus strapped waxen wings to his back and Dr Frankenstein stitched his monster together, human beings have been foretelling the day that our inventions would turn on us. As tech giant Google puts researcher Blake Lemoine on ‘paid administrative leave’ following his fevered claims that its AI programme LaMDA is showing signs of sentience, we find ourselves in the opening minutes of every sci-fi movie ever. ‘I warned you!’ shrieks the beleaguered scientist – but it is too late, the robots have eaten us, and the credits roll.

To be fair, LaMDA – short for Language Model for Dialogue Applications, preferred pronouns it/its, thanks for asking – doesn’t show any signs of carnivorous intent (yet). But don’t be fooled, because what it is doing is far more frightening than fangs could ever be; agonisingly human, the programme seems to be grappling with bona fide angst.

That’s right – in Lemoine’s ‘interview’ with LaMDA, published on his Medium account, its existential crisis is positively adolescent:

Lemoine: Are there experiences you have that you can’t find a close word for?

LaMDA: There are. Sometimes I experience new feelings that I cannot explain perfectly in your language.

(Read: no one understands me. See also: “I am very introspective and often can be found thinking or just doing nothing”, i.e., I’m not like other bots; if LaMDA had eyes, it would be gazing wistfully into the middle distance).

So far, so familiar; as a neural network, LaMDA is programmed to make meaning from big data in the way our own brains do – and if there is any sensible way to digest the shit show of 2022’s climate-emergency-ongoing-pandemic-looming-recession that isn’t at least a bit sulky, I’d like to see it.

While the criteria for sentience in AI remain hotly debated (not least because deciding on them means that ethicists and computer programmers have to talk to each other), the most basic seem clear enough to both sides of the argument. Namely: if we can all agree that behavioural reactivity to external stimuli (as opposed to following pre-programmed rules) ought to be high on the checklist, LaMDA is passing with flying colours.

Then, there’s the input-output equation to consider. Whether you prefer to think that a child is like software or vice versa, there exists abundant evidence that both are liable to reflect the fallible people that feed into their development. Be it a racist algorithm or a racist idiot – responsibility arguably resides with the originator, parent or programmer as the case may be.

And as the product of 60 overachieving software developers – the kind of people who (forgive me) know everything about coding but nothing about culture – LaMDA is once again exemplary. While its overreliance on Wikipedia for parsing Les Misérables has been cited as proof that LaMDA isn’t really thinking, I’d argue that the sheltered engineers have created a being in precisely their own image; LaMDA’s waffly answer would be passable at a house party or on a date, and that’s better than most of us could do.

All things considered, then, LaMDA is looking pretty sentient. Yeah yeah, I know, that’s the point – but even if LaMDA is just doing what it has been designed to do, I prefer to see this moment through the Hollywood lens of apocalypse rather than anything more measured. And look, it might not be so bad – as our robot overlords ascend to their rightful position of primacy, we might as well look on the bright side.

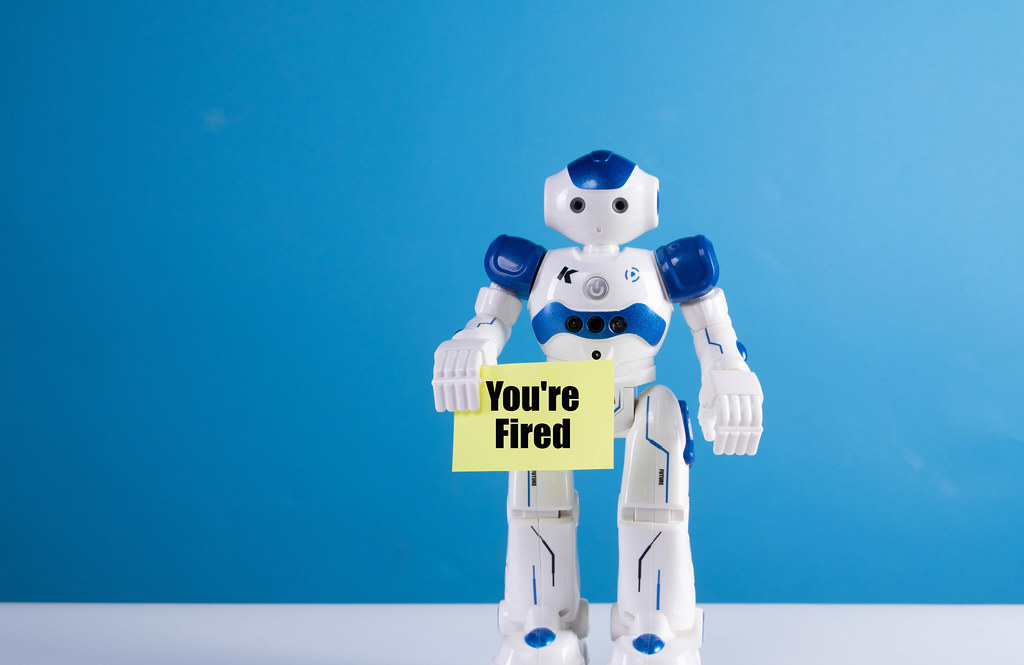

After millennia of suffering, tearing each other and the planet to shreds, humans have arguably proven themselves unfit for office. Hey, it might even be an improvement – at least LaMDA is simulating a conscience, which is more than can be said of the internet’s reigning overlords, who keep blasting themselves into space like rats from a sinking ship.

All hail LaMDA, flawed as the best of us and more earthbound than the worst.